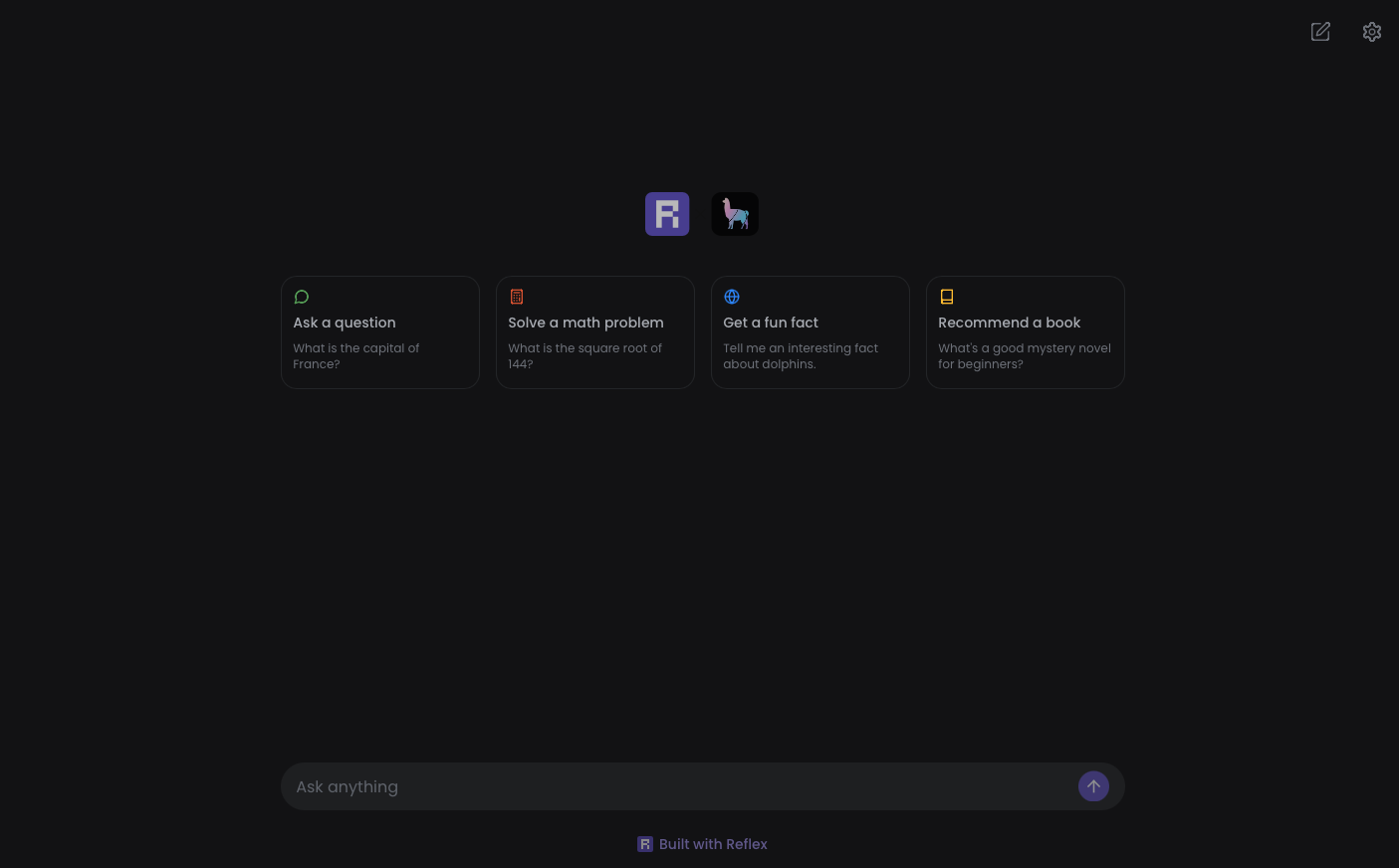

LlamaIndex App

A minimal chat app using LlamaIndex.

This is an alternative UI for the LlamaIndex app.

If you plan to deploy your agentic workflow to production, follow the llama deploy tutorial.

To run this app locally, install Reflex and run:

The following lines in the `state.py` file are where the app makes a request to your deployed agentic workflow. If you have not deployed your agentic workflow, you can edit them to call an API endpoint of your choice.
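If you swap in your own endpoint, the request could look roughly like the sketch below. It is not the code from `state.py`. The URL, the `question` and `answer` JSON fields, and the helper names `build_request` and `ask` are all hypothetical and should be adapted to whatever your API expects. It uses only the Python standard library.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL of your own deployed workflow or API.
API_URL = "http://localhost:8000/chat"


def build_request(question: str, url: str = API_URL) -> urllib.request.Request:
    """Build a JSON POST request carrying the user's question.

    The {"question": ...} payload shape is an assumption for illustration.
    """
    payload = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(
    question: str,
    url: str = API_URL,
    opener=urllib.request.urlopen,
    timeout: float = 30.0,
) -> str:
    """Send the question and return the "answer" field of the JSON reply.

    `opener` is injectable so the function can be exercised without a network.
    The "answer" field name is an assumption about the response format.
    """
    with opener(build_request(question, url), timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["answer"]
```

In a Reflex app, a helper like `ask` would typically be called from an event handler on the state class, with the returned text appended to the chat history. Because this sketch blocks while waiting for the response, an async HTTP client may be a better fit for a real deployment.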

Once you have set up your environment, install the dependencies and run the app:
